PostgreSQL Database Replication

Replication is crucial for any database server because downtime or data loss can severely impact accessibility, productivity, and user confidence. By replicating data from a primary server to one or more standby servers, the risk of data loss is significantly reduced. With PostgreSQL, setting up a database cluster in a Primary-Secondary configuration (formerly known as master-slave replication) is straightforward, allowing you to include one or more standby servers.

The most efficient replication method in PostgreSQL is using Write-Ahead Logging (WAL), which offers excellent performance through asynchronous replication. In this setup, the primary database server operates in archiving mode, continuously writing WAL files to storage and transferring them to the standby server. The standby server, running in recovery mode, promptly receives these files after they are written.

Now, let’s explore how to install and configure a Primary-Secondary PostgreSQL database cluster.

Creating PostgreSQL Primary-Secondary Cluster

The platform offers two automated methods for setting up a PostgreSQL cluster:

Pre-Packaged Marketplace Solution

The quickest and most straightforward way to create a PostgreSQL cluster is to use the pre-packaged solution from the marketplace.

1. Access the Marketplace

- Click the “Marketplace” button at the top-left of the dashboard.

- Search for the “PostgreSQL Primary-Secondary Cluster” package.

- Hover over the solution and click “Install” to proceed.

2. Configure the Cluster

- In the opened dialog, select your preferred PostgreSQL version.

- Optionally, enable Pgpool-II load balancers.

3. Wait for Setup

- Allow a few minutes for the platform to prepare your environment and configure the required replication settings.

Once the setup is complete, you’ll receive a notification with the necessary data for accessing the PostgreSQL administration interface. This information will also be sent via email.

Topology Wizard Auto-Clustering

The PostgreSQL database cluster can be enabled via the embedded Auto-Clustering feature on the dashboard. This method provides more customization options compared to the marketplace solution while still automating the configuration process.

1. Initiate Auto-Clustering

- Open the new environment topology wizard.

- Select the PostgreSQL database software stack.

- Toggle the dedicated Auto-Clustering switch. If desired, enable the Pgpool-II load balancer for your cluster.

Next, utilize the customization capabilities of the wizard to adjust the number of nodes per layer, allocate additional resources, and add other software stacks to your environment.

2. Create the Cluster

- When your configuration is set, click “Create.”

- Wait a few minutes for the platform to create your environment and set up the necessary replication configurations.

Managing PostgreSQL Cluster

Below is some helpful information on managing a PostgreSQL cluster:

- Cluster Entry Point

- Cluster Admin Panels

- Primary PostgreSQL Configuration

- Configuring Standby

- Replication Check

- Automatic Failover Scenario

- Manual Failover Scenario

Cluster Entry Point

If Pgpool-II nodes were not included in the cluster topology, use the Primary node to access the cluster. If the load balancing layer is deployed in front of the database cluster, you can use any of the Pgpool-II nodes as the entry point.

Cluster Admin Panels

In PaaS, the PostgreSQL cluster components can be managed either via CLI or UI.

Database Management

- phpPgAdmin: Database nodes have a built-in management administration panel called phpPgAdmin. This panel is accessible only on the Primary node.

Pgpool-II Management

Pgpool-II nodes can be managed via a user-friendly built-in Administration Panel called pgpoolAdmin. This panel allows you to configure:

- Load Balancing and Distribution: Manage how requests to each database should be processed and balanced.

- Connection Pools: Optimize database connections for better performance.

- Logging: Configure logging settings for monitoring and troubleshooting.

- Replication: Set up and manage replication settings.

- Debugging: Tools and settings for debugging issues.

- Failover and Failback: Configure and manage automatic failover and failback processes for high availability.

Primary PostgreSQL Configuration

Let’s take a look at the primary node configuration parameters used in auto-clustering.

1. Locate the Primary Node: In your environments list, find the environment containing the primary database. Click the Config button next to the PostgreSQL Primary node.

2. Open Configuration File: Navigate to the conf directory and open the postgresql.conf file.

Modify WAL Settings: The following lines related to the Write-Ahead Logging (WAL) files can be changed if necessary:

wal_level = hot_standby

max_wal_senders = 10

archive_mode = on

archive_command = 'cd .'Where:

- wal_level: This parameter determines the amount of information written to the WAL. There are three possible values:

- minimal: Only the information necessary to recover from a failure or emergency shutdown.

- replica: The default value, which writes enough data to support WAL archiving and replication, including running read-only queries on the standby server. In releases prior to 9.6, the values archive and hot_standby were also allowed and mapped to replica.

- logical: Adds the information required to support logical decoding to the replica logging level.

- max_wal_senders: Sets the maximum number of simultaneously running WAL transfer processes.

- archive_mode: Enables WAL archiving in conjunction with the wal_level parameter (all values except minimal enable archiving).

- archive_command: Specifies the local shell command to archive the completed WAL segment. By default, it does nothing (cd .). You can change this to copy WAL archives to a preferred destination directory. For example:

archive_command = 'test ! -f /var/lib/pgsql/data/pg_wal/%f && cp %p /tmp/mydata/%f'Press the Save button above the editor.

3. Open the pg_hba.conf configuration file. The standby database connection is permitted by stating the following parameters:

host replication all {standby_IP_address}/32 trustThat’s all for primary! Let’s proceed to the standby server’s configuration.

Configuring Standby

1. Open the postgresql.conf file, find the Standby Servers section. As you can see this server is acting as standby since the hot_standby parameter is on unlike the primary node where this parameter is commented out.

2. Scroll down to the end of the config file. There is a primary_conninfo parameter that specifies the connection string which the standby server will use to connect to the sending server. The connection string must indicate the host name (or address) of the sending server, as well as the port number. The username corresponding to the role with the appropriate privileges on the sending server is also provided. The password must also be specified in the primary_conninfo or in a separate ~/.pgpass file on the backup server if the sender requires password authentication.

primary_conninfo = 'host=primary_server_ip port=5432 user=replication_user password=your_password'3. The last option that makes database server as secondary is standby.signal file availability, which indicates the server should start up as a hot standby. File must be located in the PostgreSQL data directory and it can be empty or contain any information. Once a secondary is promoted to primary this file will be deleted.

Note: Remember that when modifying certain options, the server may need to be restarted. You can do this in two ways:

- From the dashboard, you have the option to restart either one or both nodes.

- Alternatively, you can use the command line interface via the Web SSH client. To do this, click on the Web SSH button for the desired node, such as the secondary.

sudo service postgresql restartReplication Check

1. Access the phpPgAdmin panel for your primary database by clicking the “Open in Browser” button next to it.

2. Log in using the database credentials you received via email earlier, and proceed to create a new database.

3. Next, open the admin panel of your standby database server (similarly to how you accessed the primary one) and verify if the new database has been replicated successfully.

Automatic Failover Scenario

Automatic failover in the PostgreSQL cluster relies on the Pgpool-II node and is activated when it detects the primary database’s failure. This feature is unavailable in topologies lacking Pgpool-II, necessitating manual configuration. Upon identifying a primary database outage, the load balancing node promotes a standby to the primary role. Upon the primary’s recovery, it automatically rejoins the cluster as a standby, with missing data restored via the pgrewind utility.

Manual Failover Scenario

PostgreSQL lacks native automatic failover capabilities, but third-party solutions offer options for ensuring high availability. Alternatively, users can devise custom failover solutions to address database cluster failures. While numerous failure scenarios exist, we focus on a common workflow for automating failover.

The default setup comprises two nodes:

- Primary Node

- Standby Node

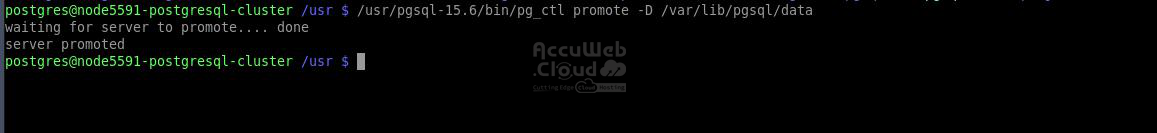

In the event of primary node failure, the standby must be promoted to primary status. This can be achieved using the pg_ctl utility, employed for initializing, starting, stopping, or controlling a PostgreSQL server. To execute this, log in to the standby server via Web SSH and issue the following command:

/usr/pgsql-15.6/bin/pg_ctl promote -D /var/lib/pgsql/dataWhere /var/lib/pgsql/data represents the database data directory.

Upon promoting the secondary database to primary status, it’s imperative to update the application connection string to reflect the new hostname or IP address, ensuring seamless cluster entry point transition.

For failover management, the pg_isready utility is invaluable, performing connection checks on the PostgreSQL database.

A simple script can automate the failover process, periodically checking the primary database server’s availability and promoting the standby node in case of primary failure. This script, named failover.sh, can be executed via a crontab on the secondary node at specified intervals. Here’s an example script implementation:

#!/bin/bash

primary="10.100.2.214"

secondary="10.100.2.134"

status=$(/usr/pgsql-15.6/bin/pg_isready -d postgres -h $primary)

response="$primary:5432 - no response"

if [ "$status" == "$response" ]

then

/usr/pgsql-15.6/bin/pg_ctl promote -D /var/lib/pgsql/data

echo "Secondary promoted to new Primary. Change your app connection string to new Primary address $secondary"

else

echo "Primary is alive. Nothing to do."

fiCluster Restoration

With a new primary address you can easily avoid tuning your application connection string by changing the IP addresses of the primary database. To do this, you have to put a load balancer in front of the cluster that will monitor the status of its components and route traffic to the current primary. Below, we will demonstrate how to restore the original cluster topology so that no changes will be required at the frontend.

Another reason the topology should be restored is related to ensuring the scalability of the cluster. Only the original topology can be scaled in/out horizontally.

Let’s see how to perform PostgreSQL database cluster restoration after the former primary was dropped off from the cluster and the former secondary was promoted to the primary.

So, the task is: the dropped-off primary should become the actual primary, and the current primary (former secondary) should become the actual secondary.

The initial data are:

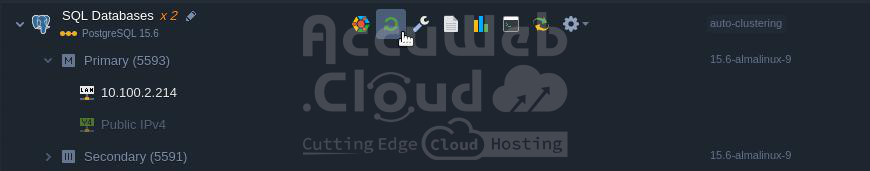

Database cluster comprises two nodes, primary (IP: 10.100.2.214) and secondary (IP: 10.100.2.134). Primary node went down and the primary database was stopped. Standby database was promoted to the primary role. Now the secondary retains the reads/writes. Former primary node was fixed and is ready to be reintroduced to the replication as primary. Do the steps as follows to get the cluster back to the initial topology:

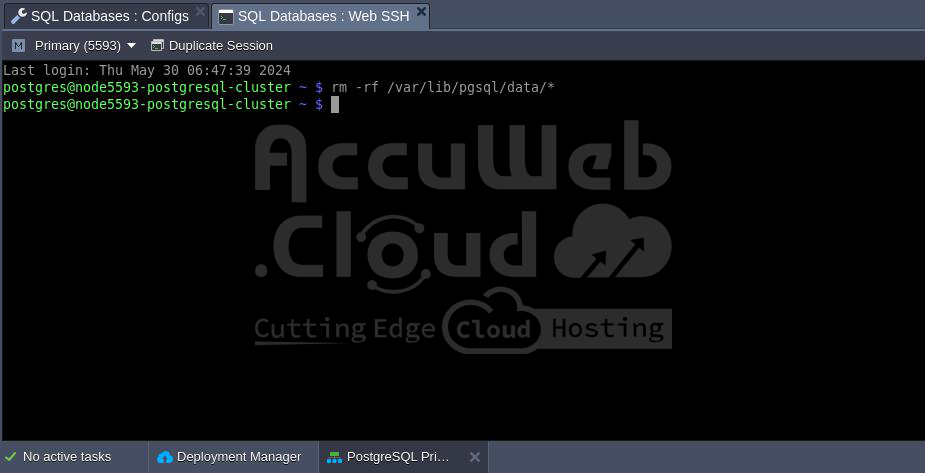

1. Enter the former primary node via Web SSH and issue the command:

rm -rf /var/lib/pgsql/data/*2. Add the former primary IP address (10.100.2.214) to the pg_hba.conf file on the current primary node:

host replication replication 10.100.2.214/32 trustRestart the current primary database to apply the changes:

sudo service postgresql restart3. Access the former primary node via Web SSH and execute the following command:

pg_basebackup -U replication -h 10.100.2.214 -D /var/lib/pgsql/data -Fp -Xs -P -RWhere:

- pg_basebackup – is used to take base backups of a running PostgreSQL database cluster.

- 10.100.2.214 – IP address of the current primary node.

- /var/lib/pgsql/data – PostgreSQL data directory.

4. Ensure the IP address in the host parameter described in the second step of Configuring Standby contains the proper IP address of the former primary.

5. Create the standby.signal file at the current primary:

touch /var/lib/pgsql/data/standby.signalRestart the node to convert it to a new secondary database:

sudo service postgresql restartRemove the standby.signal file at the former primary:

rm /var/lib/pgsql/data/standby.signalRestart the node to convert it to a new primary database:

sudo service postgresql restart6. Finally, to ensure a consistent recovery state for both primary and standby databases, a final restart is required. Restart the PostgreSQL nodes again.

Once the restart process is completed, the cluster will return to its original topology and can be scaled horizontally.