Varnish

Varnish is an HTTP reverse proxy and web application accelerator designed for dynamic, high-traffic websites. Unlike other proxy servers, it was built specifically for HTTP from the start.

On this platform, however, Varnish is bundled with an NGINX server that runs on port 443 as an HTTPS proxy, which lets it handle secure traffic and use features such as Custom SSL. In this configuration, NGINX decrypts incoming traffic and forwards it to Varnish, which listens on port 80, for further processing.

Besides acting as an accelerator, Varnish offers basic load balancing with round-robin and random distribution options, backend health checking, and other features.
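As a rough illustration of the round-robin and health-checking features mentioned above, the VCL sketch below defines a health probe and a round-robin director. It is not the configuration shipped by the platform: the backend names (app1, app2), the IP addresses, the probe name, and the probe thresholds are all placeholder assumptions, and VCL 4.0 syntax is assumed.

```vcl
vcl 4.0;

import directors;

# Hypothetical health check: request "/" every 5 seconds and treat the
# backend as healthy when at least 3 of the last 5 checks succeeded.
probe app_probe {
    .url = "/";
    .interval = 5s;
    .timeout = 2s;
    .window = 5;
    .threshold = 3;
}

# Placeholder backends; replace the addresses with real internal IPs.
backend app1 {
    .host = "10.0.0.11";
    .port = "80";
    .probe = app_probe;
}

backend app2 {
    .host = "10.0.0.12";
    .port = "80";
    .probe = app_probe;
}

sub vcl_init {
    # Round-robin director: healthy backends are used in turn.
    new rr = directors.round_robin();
    rr.add_backend(app1);
    rr.add_backend(app2);
}

sub vcl_recv {
    # Let the director pick the backend for each request.
    set req.backend_hint = rr.backend();
}
```

For random distribution, the same structure should work with directors.random(), where each add_backend call also takes a weight as its second argument.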

Its main goal is to speed up web pages as much as possible. Caching helps with this by managing the delivery of static objects.
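To make the caching of static objects more concrete, here is a minimal VCL fragment, assuming VCL 4.0 syntax. The list of file extensions and the one-hour lifetime are arbitrary assumptions chosen for illustration, and the fragment is meant to be merged into an existing VCL file that already declares its backends.

```vcl
sub vcl_recv {
    # Requests carrying cookies are not cached by default, so drop the
    # Cookie header for files that are assumed to be static.
    if (req.url ~ "(?i)\.(css|js|png|jpe?g|gif|ico|svg|woff2?)(\?.*)?$") {
        unset req.http.Cookie;
    }
}

sub vcl_backend_response {
    # Keep the same static files in the cache for one hour (assumed value)
    # and strip Set-Cookie so the response stays cacheable.
    if (bereq.url ~ "(?i)\.(css|js|png|jpe?g|gif|ico|svg|woff2?)(\?.*)?$") {
        unset beresp.http.Set-Cookie;
        set beresp.ttl = 1h;
    }
}
```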

Furthermore, Varnish can be extended with many different modules, and it comes with companion tools such as varnishlog, a robust live traffic analyzer, and the statistics tools varnishstat and varnishhist.

Varnish is a heavily threaded server in which each client connection is handled by a separate worker thread. When the configured limit of active worker threads is reached, incoming connections are placed in an overflow queue. If this queue grows too large, further incoming connections are rejected.

To use Varnish in your environment as a load balancer, just follow the simple instructions listed below.

Setting Up Varnish as a Load Balancer

Step 1. Sign in to the PaaS dashboard using your credentials.

Step 2. Click on the New Environment button located in the upper left corner.

Learn more about how to create and manage a new environment with AccuWeb.Cloud

Step 3. In the programming language tab of your choice, open the Balancing wizard section and select Varnish from the drop-down list.

Make any necessary extra configurations (adding app servers and other instances, enabling external IPs for nodes, modifying resource limitations using cloudlet sliders, etc.).

Next, click the Create button after giving your new environment a name (such as “varnish”).

Step 4. Your environment will be created in just a few minutes.

That’s all you need to know about installing Varnish! Now you can move on to configuring it.

Configuring Your Varnish Server

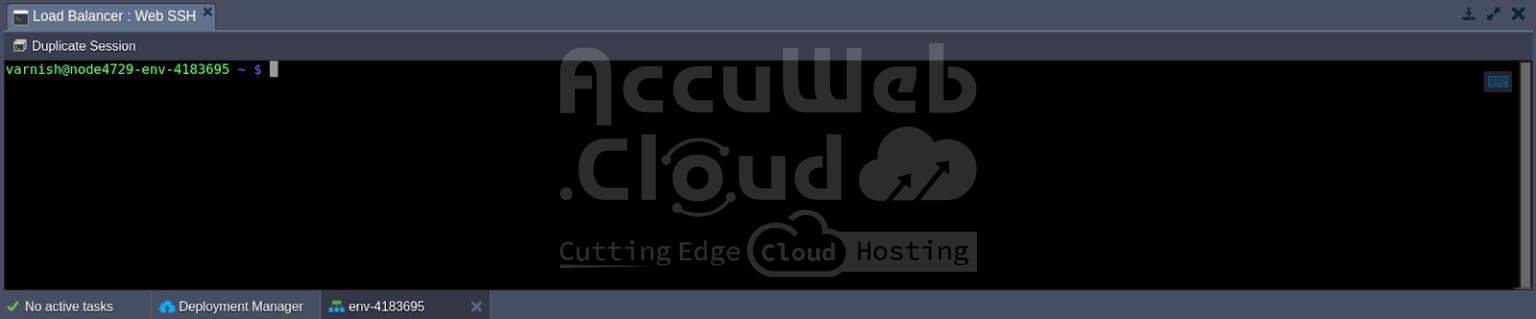

You can customize the Varnish load balancer by accessing the necessary server through the platform’s SSH Gateway.

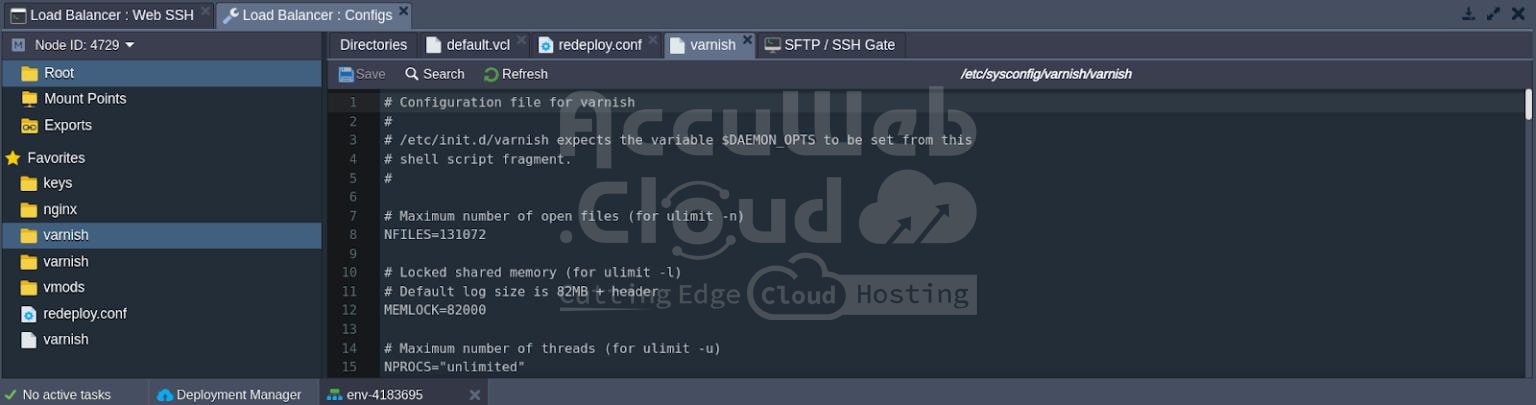

Alternatively, you can use the built-in file manager to edit the configuration files for the Varnish load balancer.

Here are some examples of configurations you can perform directly through the dashboard:

Step 1. Link the servers (even ones from other environments) that should act as backends of this load balancer. To do so, add a new record at the beginning of the /etc/varnish/default.vcl file, similar to the one below:

backend server_identifier { .host = "server_internal_ip"; .port = "80"; }

Substitute the following values with your custom ones:

- server_identifier: Any preferred name for the linking server.

- server_internal_ip: Address of the required server, which can be found by selecting the “Additionally” button next to it.

After that, add another line a little further down, in the sub vcl_init section, below the line that reads new myclust = directors.hash(); using the following format:

myclust.add_backend(server_identifier, 1);

Here, the server_identifier value must match the one used in the backend record added earlier. You can refer to the image above for an example.
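Put together, the two additions from this step might look like the sketch below. This is an illustrative file rather than the platform's actual default.vcl: the backend name app1 and the address 10.0.0.11 are placeholders, VCL 4.0 syntax is assumed, and the vcl_recv part (hashing on the client identity) is an assumption about how the hash director is consulted, since the existing file normally already contains that logic.

```vcl
vcl 4.0;

import directors;

# New record added at the beginning of the file.
# "app1" stands for server_identifier, "10.0.0.11" for server_internal_ip.
backend app1 {
    .host = "10.0.0.11";
    .port = "80";
}

sub vcl_init {
    new myclust = directors.hash();
    # New line added under the director definition; the name must match
    # the backend declared above, and 1 is the backend's weight.
    myclust.add_backend(app1, 1);
}

sub vcl_recv {
    # Assumption: pick a backend by hashing the client identity, so the
    # same client is consistently sent to the same backend.
    set req.backend_hint = myclust.backend(client.identity);
}
```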

After completing these configurations, remember to save the changes you made and restart the load-balancer server to apply them.

Step 2. Additionally, you can add custom Varnish modules to your server by uploading them into the vmods folder.

Step 3. Customize the initial parameters of the Varnish daemon by modifying the settings in the /etc/sysconfig/varnish configuration file, which are read every time the balancer starts up.

With this article, we hope you can customize your Varnish load balancer to meet your needs.