TCP Load Balancing

Load balancing distributes workloads across several components, which improves system availability and adds reliability through redundancy. The platform uses NGINX for both TCP and HTTP load balancing.

TCP load balancing can handle traffic for databases, mail servers, and other networked applications. Because it works at the connection level and does not parse HTTP requests, it typically adds less overhead than HTTP load balancing. When a client opens a network socket, the TCP load balancer directs the connection to a backend node chosen with the round-robin algorithm.

All subsequent requests on that connection go to the same selected node, which keeps the specific instance hidden from the application. The connection persists until it is disrupted, for example by a network failure. A new connection is then made, possibly to a different node.
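The routing behavior described above can be sketched in a few lines of Python. This is only a toy model for illustration, not how NGINX implements it: the class name `RoundRobinBalancer`, the connection identifiers, and the node names are all hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Toy model: pick a backend node per new connection, then keep it."""

    def __init__(self, nodes):
        if not nodes:
            raise ValueError("at least one backend node is required")
        self._next_node = cycle(nodes)  # endless round-robin iterator
        self._assigned = {}             # connection id -> chosen node

    def route(self, conn_id):
        # A known connection stays on its node; a new one takes the next node in turn.
        if conn_id not in self._assigned:
            self._assigned[conn_id] = next(self._next_node)
        return self._assigned[conn_id]

    def close(self, conn_id):
        # Once a connection is dropped, a reconnect is treated as new
        # and may land on a different node.
        self._assigned.pop(conn_id, None)
```

With two nodes, the first two connections are spread across both, and repeated calls to `route` for an open connection keep returning the same node. After `close`, the same client is assigned whichever node is next in the rotation.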

Step 1. Create an environment with two or more application servers; this example uses the Tomcat application server. NGINX is added automatically, but remember to enable the Public IP for your NGINX node by switching on the Public IPv4 slider as shown below. Then select “Create” to build the environment.

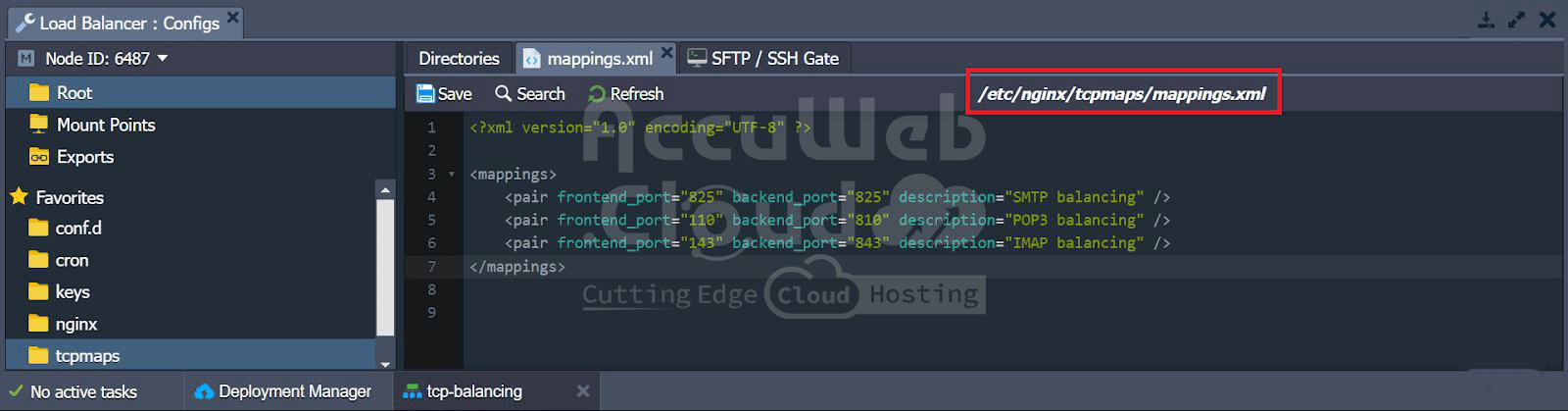

Step 2. Select the “config” option next to your load balancer.

Step 3. Navigate to the tcpmaps directory and open the mappings.xml file. Define the frontend and backend ports: the frontend port is where users connect, and the backend port is where the balancer forwards requests. Save the changes when done.
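To make the frontend/backend distinction concrete, here is a minimal Python sketch of a single port mapping: it listens on a frontend port and relays each connection to a backend port. It is not the platform's implementation and does not reflect the mappings.xml format; the function names `pipe` and `serve_mapping` and all addresses are assumptions made for this example.

```python
import socket
import threading


def pipe(src, dst):
    """Copy bytes from src to dst until src reaches end-of-stream."""
    try:
        while True:
            data = src.recv(4096)
            if not data:
                break
            dst.sendall(data)
    except OSError:
        pass  # peer went away; stop relaying
    finally:
        try:
            dst.shutdown(socket.SHUT_WR)  # pass end-of-stream along
        except OSError:
            pass


def serve_mapping(frontend_port, backend_host, backend_port, host="127.0.0.1"):
    """Listen on the frontend port and relay each connection to the backend."""
    listener = socket.create_server((host, frontend_port))

    def handle(client):
        try:
            backend = socket.create_connection((backend_host, backend_port))
        except OSError:
            client.close()  # backend unreachable
            return
        upstream = threading.Thread(target=pipe, args=(client, backend), daemon=True)
        upstream.start()        # client -> backend
        pipe(backend, client)   # backend -> client
        upstream.join()
        client.close()
        backend.close()

    def accept_loop():
        while True:
            try:
                client, _ = listener.accept()
            except OSError:
                break  # listener was closed
            threading.Thread(target=handle, args=(client,), daemon=True).start()

    threading.Thread(target=accept_loop, daemon=True).start()
    return listener
```

Clients only ever connect to the frontend port; the backend address stays an internal detail of the relay, which mirrors the role the two ports play in the mapping.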

Step 4. Restart your NGINX node. You can check the logs to confirm that the node has restarted.
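Besides reading the logs, one quick sanity check after the restart is to try opening a TCP connection to the frontend port. The sketch below uses only the Python standard library; the function name `port_accepts_connections` is hypothetical, and the host and port you pass in are placeholders for your balancer's public IP and the frontend port you configured.

```python
import socket


def port_accepts_connections(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, or unreachable: treat all as "not accepting".
        return False
```

A `True` result only shows that something is listening on the port. It does not prove that requests reach a healthy backend, so the logs remain the better source when something looks wrong.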

In summary, TCP load balancing with NGINX distributes requests across servers, improving system performance and reliability.

Configuring the frontend and backend ports in mappings.xml gives you control over request routing, and monitoring the NGINX logs helps you make timely adjustments and carry out maintenance.