Multi-Region Kubernetes Cluster Federation in AccuWeb.Cloud PaaS

Managing applications across multiple regions can be challenging. If your business operates in different geographies or needs high availability, ensuring consistency across clusters is critical. That’s where Kubernetes Cluster Federation (KubeFed) comes in.

With AccuWeb.Cloud, you can set up a multi-region Kubernetes federation in just a few steps, giving you a single point of control for all your clusters.

What is Kubernetes Cluster Federation (KubeFed)?

Kubernetes Federation links multiple Kubernetes clusters together and allows you to:

- Deploy applications centrally across multiple clusters.

- Manage workloads consistently across regions or clouds.

- Improve reliability & availability with geo-distributed clusters.

- Simplify scaling by defining replica rules across environments.

Think of it as having one “control tower” for all your clusters, no matter where they’re hosted.
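To make "replica rules" concrete, here is a minimal sketch of a federated workload as KubeFed models it: a FederatedDeployment with a template, a placement list, and a per-cluster override. The function name, the nginx image, and the argument values are illustrative assumptions; the cluster names fedhost and member1 match the clusters joined later in this guide. KubeFed typically also expects the target namespace to be federated first (for example with kubefedctl federate namespace).

```shell
# Sketch: print a FederatedDeployment manifest for two clusters.
# Assumptions: nginx as a placeholder image; clusters named fedhost and member1.
# render_federated_deployment NAME NAMESPACE REPLICAS OVERRIDE_CLUSTER OVERRIDE_REPLICAS
render_federated_deployment() {
  local name="$1" namespace="$2" replicas="$3"
  local override_cluster="$4" override_replicas="$5"
  cat <<EOF
apiVersion: types.kubefed.io/v1beta1
kind: FederatedDeployment
metadata:
  name: ${name}
  namespace: ${namespace}
spec:
  template:
    metadata:
      labels:
        app: ${name}
    spec:
      replicas: ${replicas}
      selector:
        matchLabels:
          app: ${name}
      template:
        metadata:
          labels:
            app: ${name}
        spec:
          containers:
          - name: ${name}
            image: nginx
  placement:
    clusters:
    - name: fedhost
    - name: member1
  overrides:
  - clusterName: ${override_cluster}
    clusterOverrides:
    - path: "/spec/replicas"
      value: ${override_replicas}
EOF
}
```

Once the federation is running, something like `render_federated_deployment web demo 2 member1 5 | kubectl apply -f -` on the Host Cluster would request two replicas by default and five on member1.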

Federation Prerequisites

Imagine you have five clusters in different regions within a single AccuWeb.Cloud PaaS environment, and you want to deploy applications to any of them. One cluster serves as the Host Cluster: it acts as the Federation Control Plane and pushes configurations to the Member Clusters.

To start, you need to determine which payload to distribute and which member clusters will handle it.

Let’s dive in and set up a Federation in AccuWeb.Cloud PaaS.

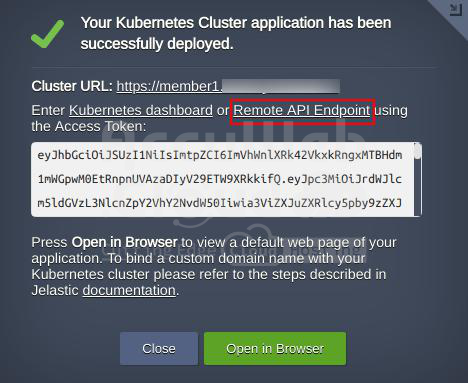

First, sign in to your account and create two Kubernetes clusters in different regions. You can create more if needed, but this example uses one Host Cluster and one Member Cluster (member1). The same steps apply to any number of Member Clusters. Deploy the following:

- Federation Host Cluster: fedhost.vip.jelastic.cloud

- Federation Member Cluster: member1.demo.jelastic.com

Setting Up Remote Access to the Clusters

Next, establish remote access to the clusters.

Log in to the master node of the Host Cluster via SSH and start configuring it. You will see some command outputs to confirm you are on the right track.

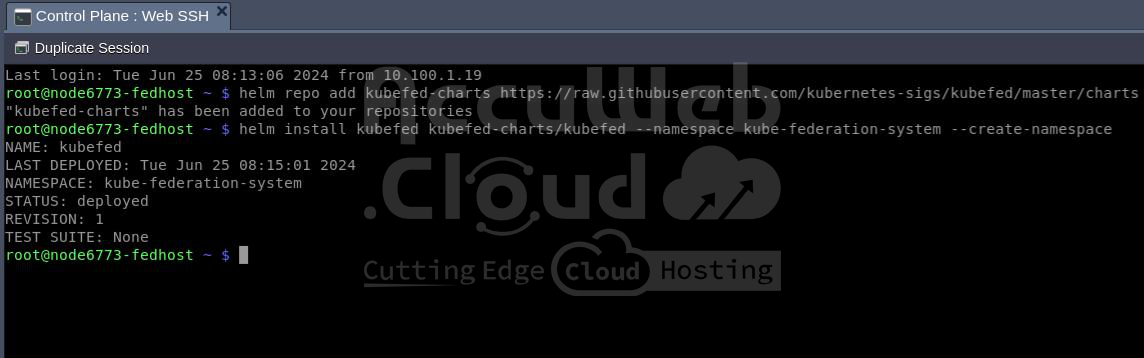

1. First, install the KubeFed chart with Helm in the kube-federation-system namespace:

Add the repository:

fedhost~$ helm repo add kubefed-charts https://raw.githubusercontent.com/kubernetes-sigs/kubefed/master/charts

Then install KubeFed. This example pins version 0.7.0:

fedhost~$ helm install kubefed kubefed-charts/kubefed --version 0.7.0 --namespace kube-federation-system --create-namespace
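Before moving on, it can help to confirm that the KubeFed control plane actually started. The sketch below waits for the two Deployments the chart normally creates; the Deployment names (kubefed-controller-manager and kubefed-admission-webhook), the function name, and the 120-second timeout are assumptions, so check them against `kubectl -n kube-federation-system get deployments` on your cluster.

```shell
# Sketch: wait until the KubeFed control plane Deployments are rolled out.
# Assumption: the chart's default Deployment names are used.
KUBECTL="${KUBECTL:-kubectl}"   # set KUBECTL=echo to print commands instead

wait_for_kubefed() {
  local ns="${1:-kube-federation-system}" deploy
  for deploy in kubefed-controller-manager kubefed-admission-webhook; do
    "$KUBECTL" rollout status "deployment/${deploy}" \
      --namespace "$ns" --timeout=120s || return 1
  done
}
```

Run `wait_for_kubefed` on the Host Cluster master node; a non-zero exit status means at least one Deployment did not become ready in time.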

2. Download the kubefedctl command-line tool (version 0.7.0 here, to match the chart) and move it to the /usr/local/bin directory.

fedhost~$ wget https://github.com/kubernetes-sigs/kubefed/releases/download/v0.7.0/kubefedctl-0.7.0-linux-amd64.tgz

fedhost~$ tar xvf kubefedctl-0.7.0-linux-amd64.tgz

fedhost~$ mv kubefedctl /usr/local/bin

3. To manage deployments, KubeFed must be able to interact with every selected Member cluster. The RBAC configuration below creates a service account, role, and binding that the Host cluster uses to connect; note that the ClusterRole grants unrestricted access to the Member cluster. Log in to the master node of the Member cluster via SSH, create a configuration file (e.g., member1.yaml), and paste the following content into it.

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    name: member1
  name: member1
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    name: member1
  name: member1
rules:
- apiGroups: ['*']
  resources: ['*']
  verbs: ['*']
- nonResourceURLs: ['*']
  verbs: ['*']
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    name: member1
  name: member1
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: member1
subjects:
- kind: ServiceAccount
  name: member1
  namespace: default

Apply the configuration file.

member1~$ kubectl apply -f member1.yaml
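Because the same manifest is needed on every Member cluster with only the name changed, it can be generated rather than edited by hand. The following is a minimal sketch, not part of the original procedure: the function name is hypothetical, and the explicit namespace on the ServiceAccount is an assumption added so that it always matches the namespace referenced in the binding.

```shell
# Sketch: print the member RBAC manifest for an arbitrary member name.
# render_member_rbac NAME [NAMESPACE]
render_member_rbac() {
  local name="$1" namespace="${2:-default}"
  cat <<EOF
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    name: ${name}
  name: ${name}
  namespace: ${namespace}
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    name: ${name}
  name: ${name}
rules:
- apiGroups: ['*']
  resources: ['*']
  verbs: ['*']
- nonResourceURLs: ['*']
  verbs: ['*']
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    name: ${name}
  name: ${name}
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ${name}
subjects:
- kind: ServiceAccount
  name: ${name}
  namespace: ${namespace}
EOF
}
```

On a second member, for example, `render_member_rbac member2 | kubectl apply -f -` would apply the same three objects under the name member2.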

4. To access all member clusters, create a context for each one. Each context should include the Kubernetes Cluster name, cluster endpoint, username with credentials, and namespace.

The credentials include:

- RBAC token

- Certificate

Get the name of the token secret that belongs to the newly created service account (e.g., member1).

member1~$ kubectl get secret | grep member1

Retrieve its content, and copy it to the clipboard.

member1~$ kubectl describe secret member1-token-zkctp
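Copying the token out of the describe output by hand is error-prone, so here is a hedged alternative that prints only the decoded token. The function name is hypothetical and member1-token-zkctp is the example secret name from above. Note that on Kubernetes 1.24 and later, token secrets are no longer created automatically for service accounts, so the grep above may return nothing until a secret of type kubernetes.io/service-account-token is created for the account.

```shell
# Sketch: print the decoded bearer token from a service-account token secret.
# get_sa_token SECRET_NAME [NAMESPACE]
KUBECTL="${KUBECTL:-kubectl}"

get_sa_token() {
  local secret="$1" namespace="${2:-default}"
  # The token is stored base64-encoded under .data.token
  "$KUBECTL" get secret "$secret" --namespace "$namespace" \
    -o jsonpath='{.data.token}' | base64 -d
}
```

For instance, `get_sa_token member1-token-zkctp` on the Member cluster prints the token that the next step passes to set-credentials.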

5. Create a user (e.g., kubefed-member1) and provide the token from the clipboard.

fedhost~$ kubectl config set-credentials kubefed-member1 --token='eyJhbGciOiJSUzI1Ni…….JYNCzwS4F57w'

6. Retrieve the member cluster endpoint that the host cluster will connect to.

member1~$ cat /root/.kube/config | grep server

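Set up the cluster name (e.g., kubefed-remote-member1) and add the member cluster endpoint. The two commands below show two possible endpoint forms: a direct API server address on port 6443, or an /api/ path on the environment domain. Run only the one that applies to your cluster, because a second set-cluster call with the same name overwrites the first.

fedhost~$ kubectl config set-cluster kubefed-remote-member1 --server='https://k8sm.member1.demo.jelastic.com:6443'

fedhost~$ kubectl config set-cluster kubefed-remote-member1 --server='https://member1.demo.jelastic.com/api/'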

7. Retrieve the certificate and copy its content to the clipboard.

member1~$ cat /root/.kube/config | grep certificate-authority-data

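Replace <certificate> with the clipboard value in the command below.

fedhost~$ kubectl config set clusters.kubefed-remote-member1.certificate-authority-data '<certificate>'

Alternatively, if the certificate does not validate against the endpoint you chose, skip TLS verification instead (acceptable for testing only). Set one of the two options, not both, because kubectl rejects a cluster entry that combines a CA certificate with insecure-skip-tls-verify.

fedhost~$ kubectl config set clusters.kubefed-remote-member1.insecure-skip-tls-verify true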

8. Finally, define the context for the Member cluster using the correct cluster name, context name, and username:

fedhost~$ kubectl config set-context member1 --cluster=kubefed-remote-member1 --user=kubefed-member1 --namespace=default

Repeat steps 3-8 for each Member cluster in the federation.
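When several members are involved, steps 5 through 8 can be wrapped in one helper that runs on the Host Cluster. This is only a sketch under the naming scheme used above (kubefed-NAME for the user, kubefed-remote-NAME for the cluster); the function name and argument order are hypothetical, and it assumes the CA certificate option rather than skipping TLS verification.

```shell
# Sketch: register one member cluster in the host's kubeconfig (steps 5-8).
# register_member NAME SERVER_URL TOKEN CA_DATA_BASE64
KUBECTL="${KUBECTL:-kubectl}"   # set KUBECTL=echo to preview the commands

register_member() {
  local name="$1" server="$2" token="$3" ca_data="$4"
  # Step 5: user with the service-account token
  "$KUBECTL" config set-credentials "kubefed-${name}" --token="$token" || return 1
  # Step 6: cluster entry pointing at the member endpoint
  "$KUBECTL" config set-cluster "kubefed-remote-${name}" --server="$server" || return 1
  # Step 7: CA certificate (base64 value copied from the member's kubeconfig)
  "$KUBECTL" config set \
    "clusters.kubefed-remote-${name}.certificate-authority-data" "$ca_data" || return 1
  # Step 8: context tying cluster and user together
  "$KUBECTL" config set-context "$name" \
    --cluster="kubefed-remote-${name}" --user="kubefed-${name}" --namespace=default
}
```

Running it first with `KUBECTL=echo` prints the four kubectl commands without touching the kubeconfig, which is a cheap way to check the arguments.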

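For convenience, rename the Host Cluster's default context to fedhost so that it is easy to reference in later commands:

fedhost~$ kubectl config rename-context kubernetes-admin@kubernetes fedhost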

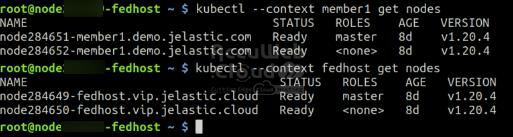

Cluster Remote Access Testing

The configuration file /root/.kube/config on the Host Cluster now includes the Member Clusters. If everything is configured correctly, you can interact with any cluster by selecting the appropriate context. For instance, let’s list the nodes of both clusters using the member1 and fedhost contexts:

fedhost~$ kubectl --context member1 get nodes

fedhost~$ kubectl --context fedhost get nodes
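With more than a couple of contexts, the same check is easier as a loop. The helper below is a small sketch (the function name is hypothetical) that runs get nodes against each context passed to it and reports which ones respond.

```shell
# Sketch: verify that every listed kubeconfig context can reach its cluster.
# check_contexts CONTEXT [CONTEXT...]
KUBECTL="${KUBECTL:-kubectl}"

check_contexts() {
  local ctx failed=0
  for ctx in "$@"; do
    if "$KUBECTL" --context "$ctx" get nodes >/dev/null 2>&1; then
      echo "ok: $ctx"
    else
      echo "unreachable: $ctx"
      failed=1
    fi
  done
  # Non-zero exit status if any context failed
  return "$failed"
}
```

For the setup in this guide the call would be `check_contexts fedhost member1`.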

Joining the Federation

With communication established between two clusters in different regions, we can use the kubefedctl tool to join them to the Federation.

Add the Host Cluster to the Federation:

fedhost~$ kubefedctl join fedhost --v=2 --host-cluster-context fedhost --kubefed-namespace=kube-federation-system

Add the Member Cluster to the Federation:

fedhost~$ kubefedctl join member1 --v=2 --host-cluster-context fedhost --kubefed-namespace=kube-federation-system

If both commands complete without errors, verify that the join was successful by checking the status of the Federation:

fedhost~$ kubectl -n kube-federation-system get kubefedclusters
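A freshly joined cluster can take a moment to report as ready. As a hedged alternative to re-running the command above, the sketch below blocks until every KubeFedCluster reports the Ready condition; the function name and the default timeout are assumptions.

```shell
# Sketch: wait until all joined clusters report condition Ready.
# wait_for_members [NAMESPACE] [TIMEOUT]
KUBECTL="${KUBECTL:-kubectl}"   # set KUBECTL=echo to preview the command

wait_for_members() {
  local ns="${1:-kube-federation-system}" timeout="${2:-120s}"
  "$KUBECTL" wait kubefedclusters --all --namespace "$ns" \
    --for=condition=Ready --timeout="$timeout"
}
```

If the wait times out, `kubectl -n kube-federation-system describe kubefedclusters` usually shows which cluster is failing and why.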

Congratulations! You now know how to create a Kubernetes Federation across multiple regions using the AccuWeb.Cloud PaaS.

People Also Ask (And You Should Too!)

Q1. What is Kubernetes Cluster Federation?

Kubernetes Cluster Federation (KubeFed) is a Kubernetes project, maintained under kubernetes-sigs, that lets you manage multiple Kubernetes clusters from a single control plane. It enables centralized deployments, consistent policies, and workload distribution across regions or cloud providers.

Q2. Why should I use Kubernetes Federation instead of a single cluster?

A single cluster typically lives in one region, which exposes it to regional outages and adds latency for distant users. Federation improves availability, geo-redundancy, and latency by distributing workloads across clusters in multiple regions.

Q3. How does Kubernetes Federation work in AccuWeb.Cloud?

AccuWeb.Cloud’s Kubernetes (AK8s) platform simplifies federation by letting you:

- Provision clusters in different regions

- Connect them through federation

- Define deployment rules and scaling policies

- Automatically balance workloads across federated clusters

Q4. What are the benefits of multi-region Kubernetes Federation?

- Disaster recovery across regions

- Global scalability with workloads closer to users

- Centralized policy management

- Zero-downtime deployments in case of regional failures

Q5. Can I use Kubernetes Federation for hybrid or multi-cloud environments?

Yes. Kubernetes Federation supports clusters across different regions, data centers, and cloud providers, making it an excellent fit for hybrid or multi-cloud strategies.

Q6. Is Kubernetes Federation suitable for small businesses?

Yes. Even smaller teams benefit from federation, especially if they need business continuity, faster app delivery, or low-latency services for global users.

Q7. What are common use cases for Kubernetes Federation?

- SaaS platforms running across regions

- E-commerce sites needing zero downtime

- Streaming and gaming platforms with global users

- Enterprises building disaster-resilient architectures

Q8. How do I get started with Kubernetes Federation on AccuWeb.Cloud?

Sign up on AccuWeb.Cloud, provision Kubernetes clusters in multiple regions, and enable federation through the dashboard. Once linked, you can deploy apps centrally and let federation manage replica distribution automatically.

Jilesh Patadiya is the Founder and Chief Technology Officer (CTO) of AccuWeb.Cloud and AccuWebHosting.com. He shares his web hosting insights on the AccuWeb.Cloud blog, writing mostly about the latest web hosting trends, WordPress, storage technologies, and Windows and Linux hosting platforms.