Explain Server Clustering With its Types and Benefits

As technology advances worldwide, the need for more efficient, better-optimized data centers keeps growing. This has given rise to the server cluster concept, which offers several advantages over traditional standalone servers, including improved scalability, higher availability, reliability, and redundancy.

Clustering servers into multiple nodes provides higher reliability to users, optimized load distribution, system scalability, and increased availability compared to relying on a single server. Clustered servers find applications in various domains, including printing, databases, file sharing, and messaging.

One noteworthy advantage of clustered servers is data protection: the servers operate cohesively within the cluster, which keeps the cluster configuration consistent over time.

What is a Server Cluster?

A server cluster is a group of interconnected servers that work together to enhance system efficiency, distribute workloads, and ensure service availability. Clients typically reach the whole cluster through a single IP address.

Every server is a node with its own hard disk, RAM, and CPU for processing data. The cluster also performs “failover clustering”: if one server fails, its active tasks are handed over to another server, which continues operation with minimal downtime.

Types of Server Clusters

1. High Availability Server Cluster

High-availability (HA) clusters suit high-traffic websites because they are built with redundant hardware and software to prevent single points of failure. They are essential for failover, system backups, and load balancing. An HA cluster consists of multiple hosts that can take over if a server fails or becomes overloaded, which keeps downtime to a minimum.

High Availability servers have two types of architecture:

1.1 Active-Active High Availability Cluster

An active-active cluster typically comprises at least two nodes, both concurrently running the same type of service. The primary goal of such a cluster is load balancing, a mechanism that distributes workloads evenly across all nodes so that no single node is overloaded. Because more nodes share the work, both throughput and response times improve noticeably.

For example, with the “Round Robin” algorithm in a two-node cluster, client connections follow this pattern:

i) The first client connects to the first server.

ii) The second client connects to the second server.

iii) The third client connects to the first server again.

iv) The fourth client connects to the second server again, and this rotation continues.
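The rotation above can be sketched in a few lines of Python. This is a minimal illustration, not a production load balancer; the server and client names are hypothetical placeholders.

```python
from itertools import cycle

def round_robin(servers):
    """Return a function that hands out the next server in rotation on each call."""
    rotation = cycle(servers)
    return lambda: next(rotation)

# Hypothetical two-node active-active cluster.
next_server = round_robin(["server-1", "server-2"])

# Four clients connect one after another.
assignments = [(f"client-{i}", next_server()) for i in range(1, 5)]
for client, server in assignments:
    print(client, "->", server)
```

The first and third clients land on `server-1`, the second and fourth on `server-2`, matching the pattern listed above.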

1.2 Active-Passive High Availability Cluster

Like the active-active cluster setup, an active-passive cluster also involves at least two nodes. However, as the term “active-passive” suggests, not all nodes are simultaneously active. In a two-node scenario, for instance, when the first node is “active,” the second node operates in a passive or standby mode.

The passive (secondary) server functions as a standby backup, ready to take over the active (primary) server’s responsibilities instantly in case of disconnection or when the active server becomes incapable of serving. This represents an active-passive failover mechanism designed for instances when a node experiences a failure.

When clients establish connections with a two-node cluster operating in an active-passive configuration, they exclusively connect to one server; in simple terms, all clients link to the same server. As in the active-active configuration, both servers must maintain identical settings, ensuring redundancy.

If the settings of the primary server are changed, the same changes must be synchronized to the secondary server. This way, when a failover occurs and the secondary server takes over, clients will not notice any difference in their interactions.
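The active-passive behavior described above can be sketched as a toy model. The class name, node names, and the `max_connections` setting are hypothetical, and a real cluster would detect failures through heartbeats or health checks rather than an explicit call.

```python
class ActivePassiveCluster:
    """Toy two-node cluster: one node serves clients, the other waits on standby."""

    def __init__(self, primary, secondary):
        self.active = primary
        self.standby = secondary
        self.settings = {primary: {}, secondary: {}}
        self.healthy = {primary: True, secondary: True}

    def update_setting(self, key, value):
        # Apply every change to both nodes so the standby stays identical.
        for node in self.settings:
            self.settings[node][key] = value

    def mark_failed(self, node):
        self.healthy[node] = False
        # Fail over only if the active node failed and the standby is healthy.
        if node == self.active and self.healthy[self.standby]:
            self.active, self.standby = self.standby, self.active

    def handle(self, request):
        # All clients are served by whichever node is currently active.
        return f"{self.active} handled {request}"


cluster = ActivePassiveCluster("node-a", "node-b")
cluster.update_setting("max_connections", 100)

before = cluster.handle("GET /")
cluster.mark_failed("node-a")   # simulate the primary going down
after = cluster.handle("GET /")
print(before)
print(after)
```

Because `update_setting` writes to both nodes, the standby already holds the same configuration when it is promoted.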

Hot Standby

In a hot standby setup, the secondary server keeps a continuously updated copy of the primary node’s database and acts as a “hot standby” or hot spare, so it is prepared to take over if a component fails. Hot standby is also less expensive to install than an active-active configuration.

High-availability clusters increase reliability while facilitating simple scaling. They also provide more effective maintenance and strong infrastructure security. These clusters enable cost savings, reduced downtime, and improved user experiences.

Benefits

Availability: By forwarding user requests to different nodes if one node is already busy, high-availability server clusters increase the availability of your hosted services.

Scalability: In the event of a load spike, such as an increase in network traffic or in demand for resources, the HA system should be able to scale quickly to meet the demand. With scalability built in, the system can adapt rapidly to any change that affects how it processes service requests.

Resource optimization: High Availability (HA) clusters contribute to resource optimization by effectively distributing workloads and resources among multiple nodes.

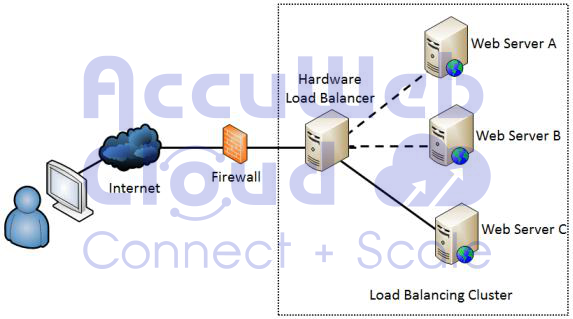

2. Load Balancing Cluster

A load-balancing cluster is a server farm that distributes user requests among several active nodes. The key advantages include redundancy, faster operations, and better workload distribution.

Distributing workloads among servers and separating functions through load balancing maximizes resource utilization. Load-balancing software routes each request to a server chosen by an algorithm, and it also manages the responses that are sent back.

Load balancers are used in the active-active architecture of a high-availability cluster. The HA cluster uses the load balancer to receive incoming requests and distribute them across several servers. Depending on the configuration and the performance of each machine, the distribution may be symmetrical or asymmetrical.
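One way to picture symmetrical versus asymmetrical distribution is a weighted rotation, where a more capable server appears more often in the schedule. The sketch below assumes hypothetical server names and weights; real load balancers usually interleave weighted picks more smoothly than this simple expansion.

```python
from itertools import cycle

def weighted_rotation(weights):
    """Build a repeating schedule in which each server appears `weight` times."""
    schedule = [server for server, weight in weights.items() for _ in range(weight)]
    return cycle(schedule)

# Symmetrical: both servers get an equal share of requests.
symmetric = weighted_rotation({"server-1": 1, "server-2": 1})
# Asymmetrical: server-1 is assumed to be three times as capable as server-2.
asymmetric = weighted_rotation({"server-1": 3, "server-2": 1})

symmetric_order = [next(symmetric) for _ in range(4)]
asymmetric_order = [next(asymmetric) for _ in range(4)]
print(symmetric_order)
print(asymmetric_order)
```

With equal weights the requests alternate between the two servers; with weights of 3 and 1, `server-1` receives three of every four requests.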

In an active-passive high-availability cluster, the load balancer monitors the availability of the nodes. If a node shuts down, the load balancer stops sending traffic to it until it is fully functional again.

Data centers and telecommunications companies mostly use this architecture. The key advantages of a load-balancing design are cost savings, improved scalability, and optimization of high-bandwidth data transfer.

Benefits

Fault Tolerance: Load balancers constantly monitor the condition of individual nodes. If a node experiences a failure, the load balancer automatically redirects traffic to operational nodes, thereby reducing service interruptions and strengthening fault tolerance.
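The redirect-on-failure behavior can be sketched as a rotation that skips nodes reported as down. The class and node names are hypothetical, and the `report` method stands in for whatever health check a real load balancer would run.

```python
class HealthAwareBalancer:
    """Round-robin rotation that skips nodes currently marked unhealthy."""

    def __init__(self, nodes):
        self.nodes = list(nodes)
        self.healthy = set(self.nodes)
        self._index = 0

    def report(self, node, is_up):
        # Record the result of a health check for one node.
        if is_up:
            self.healthy.add(node)
        else:
            self.healthy.discard(node)

    def route(self):
        # Try each node at most once per call, starting from the rotation point.
        for _ in range(len(self.nodes)):
            node = self.nodes[self._index % len(self.nodes)]
            self._index += 1
            if node in self.healthy:
                return node
        raise RuntimeError("no healthy nodes available")


lb = HealthAwareBalancer(["node-a", "node-b", "node-c"])
lb.report("node-b", False)                      # node-b fails its health check
during_outage = [lb.route() for _ in range(4)]
lb.report("node-b", True)                       # node-b recovers
after_recovery = [lb.route() for _ in range(3)]
print(during_outage)
print(after_recovery)
```

While `node-b` is down, traffic alternates between the two remaining nodes; once it recovers, it rejoins the rotation.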

Geographic Load Distribution: Load balancers can distribute traffic among geographically dispersed data centers or server locations, enhancing user response times in various regions.

Traffic Prioritization: Load balancers can prioritize specific types of traffic, guaranteeing that essential applications receive the resources and bandwidth required for optimal performance.
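A simple way to model traffic prioritization is a priority queue in which essential requests are dispatched first. This is only a sketch: the request names and priority levels are hypothetical, and real load balancers typically prioritize through traffic classes or rules rather than a single in-memory queue.

```python
import heapq
from itertools import count

class PriorityDispatcher:
    """Dispatch queued requests by priority (lower number = more important)."""

    def __init__(self):
        self._queue = []
        self._order = count()  # tie-breaker keeps arrival order within a priority

    def submit(self, request, priority):
        heapq.heappush(self._queue, (priority, next(self._order), request))

    def next_request(self):
        # Pop the most important request that arrived earliest.
        return heapq.heappop(self._queue)[2]


dispatcher = PriorityDispatcher()
dispatcher.submit("report-export", priority=2)
dispatcher.submit("checkout", priority=0)
dispatcher.submit("image-thumbnail", priority=2)
dispatcher.submit("login", priority=0)

dispatch_order = [dispatcher.next_request() for _ in range(4)]
print(dispatch_order)
```

The two priority-0 requests are served before the priority-2 ones, and requests with equal priority keep their arrival order.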

3. High-Performance Cluster

High-performance clusters, sometimes called supercomputers, provide higher performance, capacity, and dependability. Businesses with resource-intensive workloads tend to use them the most.

A high-performance cluster comprises numerous computers connected by a common network. Furthermore, you can connect several clusters to data storage facilities to handle data effectively. In other words, high-performance data storage clusters give you smooth performance and high-speed data transfers.

People frequently use these clusters with Internet of Things (IoT) and artificial intelligence (AI) technologies. They handle massive amounts of real-time data to fuel initiatives like real-time streaming, storm forecasting, and patient diagnosis. Media, finance, and research commonly use high-performance cluster applications.

Benefits

Better performance for big data and analytics: High-performance storage clusters handle extensive data for big data analytics, machine learning, and data-intensive workloads.

Enhanced Data Security: Advanced clustered storage systems often feature strong access controls, ensuring that only authorized users can access sensitive data.

4. Clustered Storage

Clustered storage needs a minimum of two storage servers. It allows you to improve your system’s performance, dependability, and node input/output (I/O) capacity.

Depending on your business and storage needs, you can select a tightly coupled or loosely coupled architecture.

A tightly coupled architecture is aimed at primary storage; it divides data into small blocks that are spread across the nodes.
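The block-splitting idea behind a tightly coupled architecture can be sketched as follows. The sketch assumes that blocks are placed on nodes in simple rotation; the node names and block size are hypothetical, and real systems also add redundancy such as replication or parity.

```python
def stripe(data, nodes, block_size):
    """Split `data` into fixed-size blocks and place them on nodes in rotation."""
    placement = {node: [] for node in nodes}
    for offset in range(0, len(data), block_size):
        node = nodes[(offset // block_size) % len(nodes)]
        placement[node].append(data[offset:offset + block_size])
    return placement

def reassemble(placement, nodes):
    """Read the blocks back in the same rotation to rebuild the original data."""
    total = sum(len(blocks) for blocks in placement.values())
    return b"".join(placement[nodes[i % len(nodes)]][i // len(nodes)] for i in range(total))


nodes = ["node-1", "node-2", "node-3"]
placement = stripe(b"abcdefghij", nodes, block_size=4)
restored = reassemble(placement, nodes)
print(placement)
print(restored)
```

Because every node holds part of the data, reads and writes can be served by several nodes at once, which is what lets this style of architecture grow with additional nodes.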

A loosely coupled architecture is self-contained and provides more flexibility. It does not store data across nodes, so performance and capacity are limited by the capabilities of the node that stores the data. Unlike a tightly coupled architecture, a loosely coupled architecture cannot scale by adding new nodes.

Benefits

High Performance: Clustered storage often suits high-performance computing (HPC) applications, where hundreds, thousands, or tens of thousands of clients actively perform data read and write operations on massive datasets.

Efficient Data Management: Clustered storage systems provide advanced data management capabilities, including tiering, which keeps frequently accessed data on high-speed storage media and less frequently used data on slower media. This optimizes the use of costly storage resources while giving faster access to the data that is used most often.
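Tiering can be illustrated with a small placement rule based on access counts. The threshold, file names, and tier labels below are hypothetical; real tiering policies usually also consider recency, file size, and cost.

```python
def assign_tiers(access_counts, hot_threshold):
    """Place each item on the fast or slow tier based on how often it is accessed."""
    return {
        name: ("fast" if hits >= hot_threshold else "slow")
        for name, hits in access_counts.items()
    }

# Hypothetical access counts collected over some monitoring window.
access_counts = {"orders.db": 950, "logo.png": 400, "archive-2019.tar": 3}
tiers = assign_tiers(access_counts, hot_threshold=100)
print(tiers)
```

Items at or above the threshold go to the fast tier, and everything else stays on slower, cheaper media.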

Scalability: Clustered storage lets organizations expand their storage infrastructure by adding servers to the cluster as their storage demands increase. This avoids having to replace existing hardware and offers a cost-efficient way to increase storage capacity.

Conclusion

Adopting server clusters is a strategic move for organizations in today’s digital era. These clusters provide robust solutions, ensuring high availability, efficient resource utilization, and enhanced reliability. Server clusters are crucial for optimizing web services, managing extensive data, and ensuring fault tolerance in the competitive online landscape, where cost savings and performance are top priorities.

Jilesh Patadiya is the visionary Founder and Chief Technology Officer (CTO) behind AccuWeb.Cloud and the Founder & CTO at AccuWebHosting.com. He shares his web hosting insights on the AccuWeb.Cloud blog, where he mostly writes about the latest web hosting trends, WordPress, storage technologies, and Windows and Linux hosting platforms.